Matching Two Images with OpenCV in Python

This article shares working code for matching two images with OpenCV in Python, for your reference. The details are as follows.

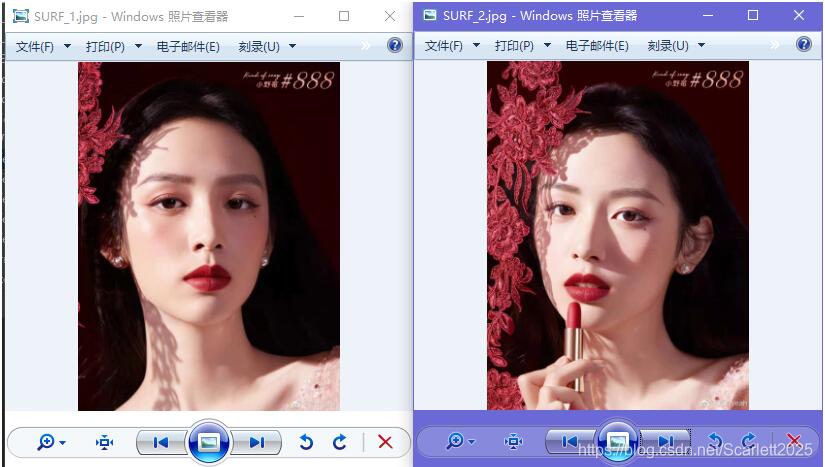

Original image

    import cv2

    # Load both images as grayscale and resize them to the same size.
    img1 = cv2.imread('SURF_2.jpg', cv2.IMREAD_GRAYSCALE)
    img1 = cv2.resize(img1, dsize=(600, 400))
    img2 = cv2.imread('SURF_1.jpg', cv2.IMREAD_GRAYSCALE)
    img2 = cv2.resize(img2, dsize=(600, 400))
    image1 = img1.copy()
    image2 = img2.copy()

    # Create a SURF object (Hessian threshold 25000).
    surf = cv2.xfeatures2d.SURF_create(25000)

    # SURF detects keypoints with a Hessian-based detector and computes a feature
    # vector for the region around each keypoint. detectAndCompute returns the
    # keypoints and their descriptors.
    keypoints1, descriptor1 = surf.detectAndCompute(image1, None)
    keypoints2, descriptor2 = surf.detectAndCompute(image2, None)
    # print('descriptor1:', descriptor1.shape, 'descriptor2:', descriptor2.shape)

    # Draw the keypoints on the images.
    image1 = cv2.drawKeypoints(image=image1, keypoints=keypoints1, outImage=image1,
                               color=(255, 0, 255),
                               flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
    image2 = cv2.drawKeypoints(image=image2, keypoints=keypoints2, outImage=image2,
                               color=(255, 0, 255),
                               flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)

    # Show the images.
    cv2.imshow('surf_keypoints1', image1)
    cv2.imshow('surf_keypoints2', image2)
    cv2.waitKey(20)

    matcher = cv2.FlannBasedMatcher()
    matchePoints = matcher.match(descriptor1, descriptor2)
    # print(type(matchePoints), len(matchePoints), matchePoints[0])

    # Find the smallest and largest match distances.
    minMatch = min(m.distance for m in matchePoints)
    maxMatch = max(m.distance for m in matchePoints)
    print('Best match distance:', minMatch)
    print('Worst match distance:', maxMatch)

    # Keep only the top-ranked matches: those within the lowest 1/16 of the distance range.
    threshold = minMatch + (maxMatch - minMatch) / 16
    goodMatchePoints = [m for m in matchePoints if m.distance < threshold]

    # Draw the best matches.
    outImg = cv2.drawMatches(img1, keypoints1, img2, keypoints2, goodMatchePoints, None,
                             matchColor=(0, 255, 0), flags=cv2.DRAW_MATCHES_FLAGS_DEFAULT)
    cv2.imshow('matche', outImg)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
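The filtering step in the listing above keeps the matches whose distance falls within the lowest 1/16 of the observed distance range. Below is a minimal sketch of that rule in isolation. It uses a hypothetical `Match` namedtuple as a stand-in for `cv2.DMatch`, so it runs without OpenCV. The names `Match` and `select_good_matches` are illustrative and are not part of the original code.

```python
from collections import namedtuple

# Hypothetical stand-in for cv2.DMatch; only the fields used here are modelled.
Match = namedtuple("Match", ["queryIdx", "trainIdx", "distance"])


def select_good_matches(matches, fraction=1 / 16):
    """Keep matches whose distance is below min + (max - min) * fraction."""
    if not matches:
        return []
    distances = [m.distance for m in matches]
    lo, hi = min(distances), max(distances)
    threshold = lo + (hi - lo) * fraction
    # Strict comparison, as in the article: if every distance is identical,
    # the threshold equals that distance and nothing is kept.
    return [m for m in matches if m.distance < threshold]


if __name__ == "__main__":
    sample = [
        Match(0, 0, 0.10),
        Match(1, 1, 0.12),
        Match(2, 2, 0.50),
        Match(3, 3, 1.70),
    ]
    for m in select_good_matches(sample):
        print(m.queryIdx, m.trainIdx, m.distance)
```

Because the threshold depends on the best and worst distances in the current image pair, the number of matches kept varies from pair to pair. A larger `fraction` keeps more matches at the cost of more false positives.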

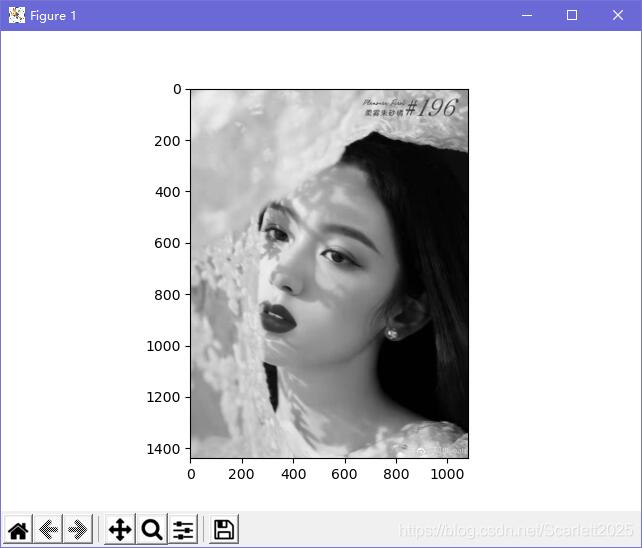

Original image

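    # coding=utf-8
    import cv2
    from matplotlib import pyplot as plt

    img = cv2.imread('xfeatures2d.SURF_create2.jpg', 0)

    # Old OpenCV 2.4 API, kept for reference:
    # surf = cv2.SURF(400)  # Hessian threshold 400
    # kp, des = surf.detectAndCompute(img, None)
    # leng = len(kp)
    # print(leng)

    # Too many keypoints at 400, so choose a higher threshold.
    surf = cv2.xfeatures2d.SURF_create(50000)  # Hessian threshold 50000
    kp, des = surf.detectAndCompute(img, None)
    leng = len(kp)
    print(leng)
    img2 = cv2.drawKeypoints(img, kp, None, (255, 0, 0), 4)  # 4 = DRAW_RICH_KEYPOINTS
    plt.imshow(img2)
    plt.show()

    # U-SURF: every keypoint gets the same orientation, which speeds up computation.
    # The xfeatures2d SURF object is configured through setters, not attributes.
    surf.setUpright(True)
    kp = surf.detect(img, None)
    img3 = cv2.drawKeypoints(img, kp, None, (255, 0, 0), 4)
    plt.imshow(img3)
    plt.show()

    # Switch the descriptor from 64 to 128 dimensions and check its size.
    surf.setExtended(True)
    kp, des = surf.detectAndCompute(img, None)
    dem1 = surf.descriptorSize()
    print(dem1)
    shp1 = des.shape  # shape is an attribute, not a method
    print(shp1)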
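Both SURF listings above rely on `cv2.xfeatures2d`, which ships only with the contrib build of OpenCV. SURF is also a non-free algorithm, so builds without the non-free modules raise an error when it is created. The sketch below shows one way to check for SURF before running the examples. The helper name `try_create_surf` is an assumption made for illustration and is not part of OpenCV or the original article.

```python
def try_create_surf(hessian_threshold=400):
    """Return a SURF detector, or None if SURF is unavailable in this environment."""
    try:
        import cv2
        return cv2.xfeatures2d.SURF_create(hessian_threshold)
    except (ImportError, AttributeError):
        # OpenCV is not installed, or the build has no xfeatures2d (contrib) module.
        return None
    except Exception:
        # Typically cv2.error: the build excludes the non-free algorithms.
        return None


if __name__ == "__main__":
    surf = try_create_surf(50000)
    if surf is None:
        print("SURF is not available in this OpenCV build")
    else:
        print("SURF descriptor size:", surf.descriptorSize())
```

If the helper returns `None`, a freely available detector such as SIFT or ORB is a possible substitute, although the thresholds and descriptor sizes in the listings would no longer apply.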

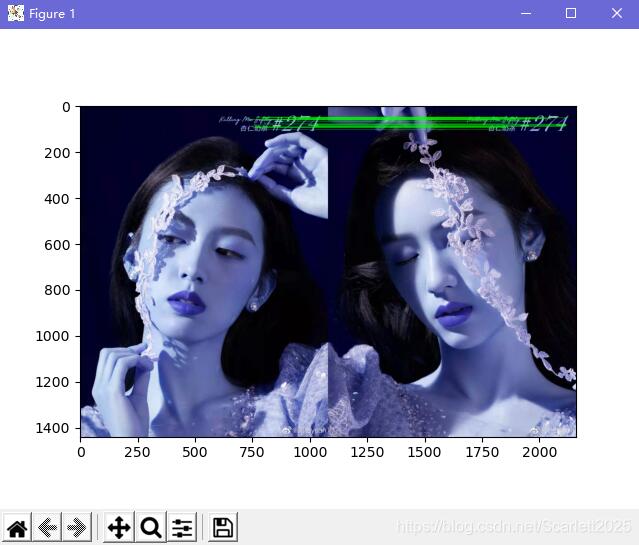

Result

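    import cv2
    from matplotlib import pyplot as plt

    leftImage = cv2.imread('xfeatures2d.SURF_create_1.jpg')
    rightImage = cv2.imread('xfeatures2d.SURF_create_2.jpg')

    # Create a SIFT detector (OpenCV 4.4 and later also expose it as cv2.SIFT_create()).
    sift = cv2.xfeatures2d.SIFT_create()
    # detectAndCompute returns the keypoints and their descriptors.
    kp1, des1 = sift.detectAndCompute(leftImage, None)
    kp2, des2 = sift.detectAndCompute(rightImage, None)

    FLANN_INDEX_KDTREE = 0
    indexParams = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
    searchParams = dict(checks=50)  # number of times the index trees are traversed
    flann = cv2.FlannBasedMatcher(indexParams, searchParams)
    matches = flann.knnMatch(des1, des2, k=2)

    # One [0, 0] mask entry per query descriptor; [1, 0] marks a match to draw.
    matchesMask = [[0, 0] for i in range(len(matches))]
    print('matches', matches[0])
    for i, (m, n) in enumerate(matches):
        # Ratio test. 0.07 is far stricter than the commonly used 0.7 to 0.8,
        # so only a handful of very strong matches survive.
        if m.distance < 0.07 * n.distance:
            matchesMask[i] = [1, 0]

    # flags=2 draws only the matched points; flags=0 draws every keypoint.
    drawParams = dict(matchColor=(0, 255, 0), singlePointColor=None,
                      matchesMask=matchesMask, flags=2)
    resultImage = cv2.drawMatchesKnn(leftImage, kp1, rightImage, kp2, matches, None, **drawParams)

    # OpenCV stores images as BGR, while matplotlib expects RGB.
    plt.imshow(cv2.cvtColor(resultImage, cv2.COLOR_BGR2RGB))
    plt.show()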
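The loop in the SIFT listing applies a ratio test: a match is kept only when the nearest neighbour is much closer than the second nearest. The sketch below isolates that step using plain distance tuples instead of `cv2.DMatch` objects, so it runs without OpenCV. The function name `ratio_test_mask` and the default ratio of 0.7 are assumptions made for illustration; the listing above uses 0.07.

```python
def ratio_test_mask(knn_distances, ratio=0.7):
    """Build a matchesMask-style list from (best, second_best) distance pairs.

    Entries with fewer than two neighbours are masked out, since the ratio
    test cannot be evaluated for them.
    """
    mask = []
    for pair in knn_distances:
        if len(pair) == 2 and pair[0] < ratio * pair[1]:
            mask.append([1, 0])  # draw the best neighbour only
        else:
            mask.append([0, 0])  # draw nothing for this query descriptor
    return mask


if __name__ == "__main__":
    distances = [(0.1, 0.5), (0.4, 0.5), (0.3,)]
    print(ratio_test_mask(distances))
```

The length check matters in practice because `knnMatch` can return fewer than `k` neighbours for some descriptors, in which case unpacking each entry as `(m, n)` would fail.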

That is all for this article. I hope it helps with your studies.
